Bot quality evaluation

Bot quality evaluation is a tool that allows you to test a bot on dialog sets.

Each dialog contains user requests and expected bot reactions. During a test, the tool compares the received bot reactions with the expected ones.

You can view test history and download detailed reports with the results.

- During a test, the bot performs real requests. Requests may exceed JAICP limits or third-party service quotas.

- You can only check text responses and bot states.

- You cannot check the bot’s reactions to events.

To evaluate the bot quality:

- Go to the Bot quality evaluation section.

- Prepare a file with the dialog set.

- Upload the dialog set.

- Run a test.

- View test history and the report.

File with dialog set

The dialog file must be in one of the formats:

- XLS

- XLSX

- CSV

CSV file requirements

- Use one of the characters as a delimiter between fields:

  - comma: ,
  - semicolon: ;
  - vertical bar: |
  - tab character.

- If a value contains the delimiter, it must be enclosed in double quotation marks: ".

Table:

| testCase | comment | request | expectedResponse | expectedState | skip | preActions |
|---|---|---|---|---|---|---|
| hello | | /start | | /Start | | |
| hello | | well, hello | Hello there! | /Hello | | |
| weather | | What’s the weather? | | /Weather | | |

CSV:

testCase,comment,request,expectedResponse,expectedState,skip,preActions

hello,,/start,,/Start,,

hello,,"well, hello",Hello there!,/Hello,,

weather,,What's the weather?,,/Weather,,
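A dialog set in CSV format can also be generated programmatically. The following is a minimal sketch using Python's standard csv module. The function name build_dialog_set_csv and the steps list are hypothetical and used only for illustration. With QUOTE_MINIMAL, the writer encloses a value in double quotation marks only when the value contains the delimiter.

```python
import csv
import io

# Column names taken from the example dialog set above.
FIELDS = ["testCase", "comment", "request", "expectedResponse",
          "expectedState", "skip", "preActions"]

# Hypothetical steps that mirror the example; missing fields stay empty.
steps = [
    {"testCase": "hello", "request": "/start", "expectedState": "/Start"},
    {"testCase": "hello", "request": "well, hello",
     "expectedResponse": "Hello there!", "expectedState": "/Hello"},
    {"testCase": "weather", "request": "What's the weather?",
     "expectedState": "/Weather"},
]


def build_dialog_set_csv(rows, delimiter=","):
    """Return the dialog set as CSV text using the given delimiter."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=FIELDS,
        delimiter=delimiter,
        quoting=csv.QUOTE_MINIMAL,  # quote only values that need it
        restval="",                 # fill missing fields with empty strings
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = build_dialog_set_csv(steps)
print(csv_text)
```

Passing delimiter=";" produces the same data with semicolons. In that case "well, hello" is no longer quoted, because it does not contain the delimiter.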

The file contains test cases that are used to evaluate the bot quality. A test case can consist of several steps. Each line of the file is one test case step.

Each test case describes a new session and a new client.
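To inspect a dialog set before uploading it, the steps can be grouped into test cases. The sketch below assumes that rows with the same testCase value belong to the same test case, as the example suggests. The function name group_steps is hypothetical.

```python
import csv
import io

# Sample dialog set taken from the CSV example above.
SAMPLE = """\
testCase,comment,request,expectedResponse,expectedState,skip,preActions
hello,,/start,,/Start,,
hello,,"well, hello",Hello there!,/Hello,,
weather,,What's the weather?,,/Weather,,
"""


def group_steps(csv_text, delimiter=","):
    """Group rows (steps) by the testCase column, keeping file order."""
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    cases = {}
    for row in reader:
        # Assumption: steps that share a testCase value form one test case.
        cases.setdefault(row["testCase"], []).append(row)
    return cases


cases = group_steps(SAMPLE)
for name, case_steps in cases.items():
    print(name, [step["request"] for step in case_steps])
```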

In the Dialog set fields article, you can learn more about the fields that must be in the file and how to fill them out.

To download an example file, select Download example of file with dialogs in the top right corner.

Upload dialog set

To upload a dialog set:

- Select Upload a file with dialogs.

- Specify the set name.

- Attach a file with dialogs.

- Select Save.

Run test

To run a test, select Run test on the panel with the dialog set.

The bot version used for the test depends on the project type:

- For a local JAICP project: the last version saved in the editor.

- For a project in an external repository: the last commit on the current branch.

Test history

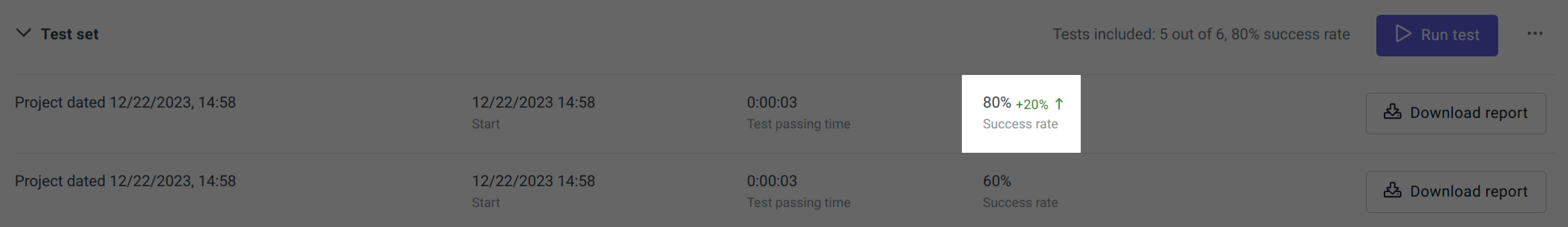

To open the test history, select a dialog set panel.

For each test, the Success rate is displayed. It is the percentage of successful steps out of the total number of non-skipped steps.
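The Success rate definition can be expressed as a short calculation. The following sketch is an illustration only: the function name success_rate is hypothetical, and the result labels are taken from the report example in this article.

```python
def success_rate(results):
    """Return the percentage of OK steps among non-skipped steps.

    Returns None when every step was skipped, since the rate is
    undefined in that case (an assumption made for this sketch).
    """
    checked = [result for result in results if result != "SKIPPED"]
    if not checked:
        return None
    ok_count = sum(1 for result in checked if result == "OK")
    return 100.0 * ok_count / len(checked)


# One skipped step, two successful steps, one unsuccessful step.
results = ["SKIPPED", "OK", "OK", "FAILED"]
rate = success_rate(results)
print(f"{rate:.1f}%")
```

Here the skipped step is excluded, so the rate is 2 successful steps out of 3 checked steps.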

To view the chart with test success dynamics:

- On the dialog set panel, select .

- Select View dynamics.

Chart example:

Report

To download a detailed test report, select Download report.

The report is in CSV format. The report contains the dialog set and additional fields:

- actualResponse is the received bot response.

- actualState is the first state that the bot transitioned to.

- result is the result of the step check. Values:

  - OK: the expected bot reaction matched the received one.
  - FAIL: the expected bot reaction did not match the received one.
  - SKIPPED: the step was skipped and not checked.

- transition is the history of bot state transitions in the step.

- responseTime is the duration of the step in milliseconds.

Report example

Table:

| testCase | comment | request | expectedResponse | expectedState | skip | preActions | actualResponse | actualState | result | transition | responseTime |
|---|---|---|---|---|---|---|---|---|---|---|---|
| hello | | /start | | /Start | true | | | | SKIPPED | | 0 |
| hello | | well, hello | Hello there! | /Hello | false | | Hello there! | /Hello | OK | /Hello | 1424 |
| weather | | What’s the weather? | | /Hello/Weather | false | hello | In what city? | /Hello/Weather | OK | /Hello/Weather | 468 |
| alarm | | Set an alarm | | /Hello/Alarm | false | hello | For when? | /Hello/Meeting | FAILED | /Hello/Meeting→/Hello/Time | 491 |

CSV:

testCase,comment,request,expectedResponse,expectedState,skip,preActions,actualResponse,actualState,result,transition,responseTime

hello,,/start,,/Start,true,,,,SKIPPED,,0

hello,,"well, hello",Hello there!,/Hello,false,,Hello there!,/Hello,OK,/Hello,1424

weather,,What's the weather?,,/Hello/Weather,false,hello,In what city?,/Hello/Weather,OK,/Hello/Weather,468

alarm,,Set an alarm,,/Hello/Alarm,false,hello,For when?,/Hello/Meeting,FAILED,/Hello/Meeting->/Hello/Time,491
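A downloaded report can be summarized with a few lines of code. The sketch below parses the example report with Python's standard csv module, counts the steps by result, and lists the steps that were neither successful nor skipped. The function name summarize_report is hypothetical. Any result value other than OK or SKIPPED is treated as unsuccessful, which is an assumption made so that the sketch does not depend on the exact label.

```python
import csv
import io
from collections import Counter

# Example report taken from the CSV above.
REPORT = """\
testCase,comment,request,expectedResponse,expectedState,skip,preActions,actualResponse,actualState,result,transition,responseTime
hello,,/start,,/Start,true,,,,SKIPPED,,0
hello,,"well, hello",Hello there!,/Hello,false,,Hello there!,/Hello,OK,/Hello,1424
weather,,What's the weather?,,/Hello/Weather,false,hello,In what city?,/Hello/Weather,OK,/Hello/Weather,468
alarm,,Set an alarm,,/Hello/Alarm,false,hello,For when?,/Hello/Meeting,FAILED,/Hello/Meeting->/Hello/Time,491
"""


def summarize_report(report_text, delimiter=","):
    """Return (counts per result value, list of unsuccessful rows)."""
    rows = list(csv.DictReader(io.StringIO(report_text), delimiter=delimiter))
    counts = Counter(row["result"] for row in rows)
    # Assumption: anything that is not OK or SKIPPED is unsuccessful.
    unsuccessful = [row for row in rows
                    if row["result"] not in ("OK", "SKIPPED")]
    return counts, unsuccessful


counts, unsuccessful = summarize_report(REPORT)
print(dict(counts))
for row in unsuccessful:
    print(row["testCase"], row["request"],
          "expected", row["expectedState"], "got", row["actualState"])
```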

The hello test case:

- The user says “/start”.

  Expected reaction: the bot transitions to the /Start state. The step is skipped and not checked because skip is set to true.

- The user says “well, hello”.

  Expected reaction: the bot transitions to the /Hello state and replies “Hello there!”. The received state and response match the expected ones. The step is considered successful.

The weather test case:

- The preActions steps from the hello test case are performed.

- The user says “What’s the weather?”.

  Expected reaction: the bot transitions to the /Hello/Weather state. The received state matches the expected one. The response text is not checked because the expectedResponse field is empty. The step is considered successful.

The alarm test case:

- The preActions steps from the hello test case are performed.

- The user says “Set an alarm”.

  Expected reaction: the bot transitions to the /Hello/Alarm state. The received state does not match the expected one. The step is considered unsuccessful.
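The checks walked through in these test cases can be sketched in code. This is an illustration under stated assumptions, not the tool's actual implementation: the function name check_step is hypothetical, values are compared as exact strings, and an empty expectedState is treated as not checked in the same way as an empty expectedResponse.

```python
def check_step(step, actual_state, actual_response):
    """Return "SKIPPED", "OK", or "FAIL" for one test case step."""
    # A step with skip set to true is not checked at all.
    if step.get("skip", "").strip().lower() == "true":
        return "SKIPPED"

    expected_state = step.get("expectedState", "")
    expected_response = step.get("expectedResponse", "")

    # Assumption: an empty expected field means that field is not checked.
    if expected_state and expected_state != actual_state:
        return "FAIL"
    if expected_response and expected_response != actual_response:
        return "FAIL"
    return "OK"


# Steps and received reactions from the report example.
start = {"expectedState": "/Start", "skip": "true"}
hello = {"expectedResponse": "Hello there!", "expectedState": "/Hello",
         "skip": "false"}
weather = {"expectedState": "/Hello/Weather", "skip": "false"}
alarm = {"expectedState": "/Hello/Alarm", "skip": "false"}

print(check_step(start, "", ""))
print(check_step(hello, "/Hello", "Hello there!"))
print(check_step(weather, "/Hello/Weather", "In what city?"))
print(check_step(alarm, "/Hello/Meeting", "For when?"))
```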